MERIDIAN is working with JASCO to design a tool that biologists and other researchers can use to visualize underwater acoustic data with a directional component indicating bearing. The system will visualize data collected from hydrophone arrays that can discern the bearing of sound sources, which helps users distinguish between animals and track the movement of vessels and wildlife. Acoustic data is normally presented to biologists as 2D Time versus Frequency spectrograms that use colour to indicate intensity; this system must also present the directional information in a way that is intuitive for the user. Experimentation with directional colours and filtering techniques has shown promise, and we are also investigating solutions that take advantage of interaction, three-dimensional views, and accompanying Time versus Direction plots and animations. The system will allow biologists to analyze, classify, and annotate data, and to prepare reports for presentation.

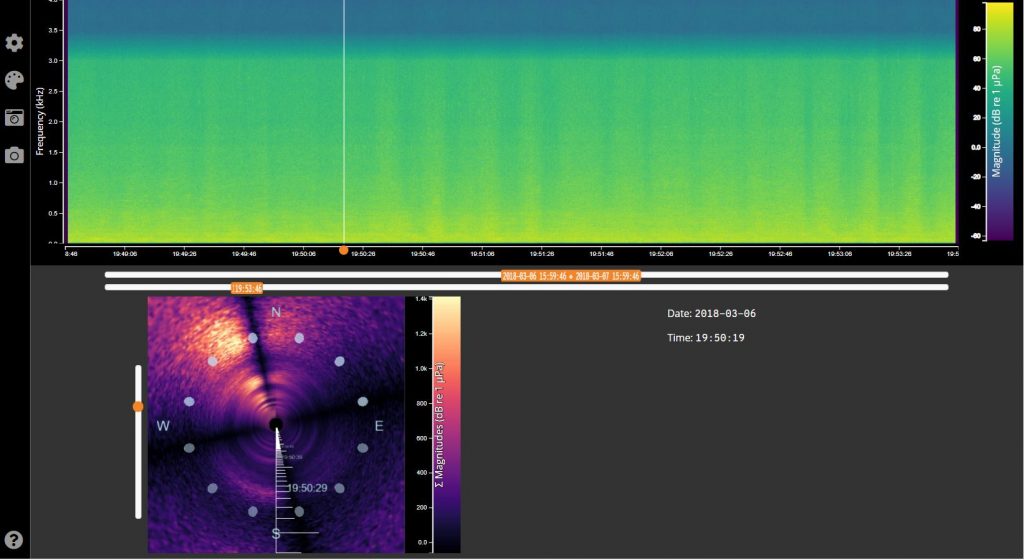

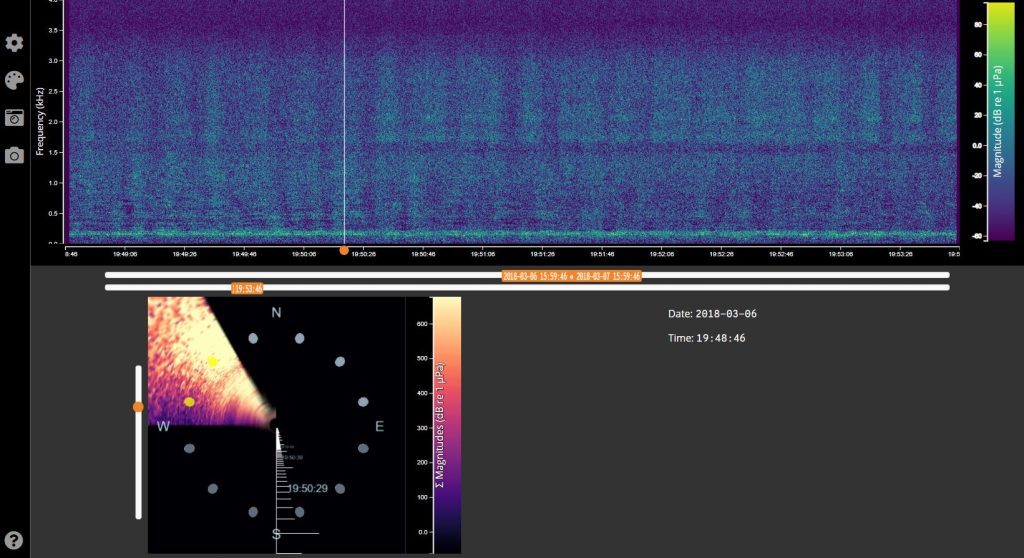

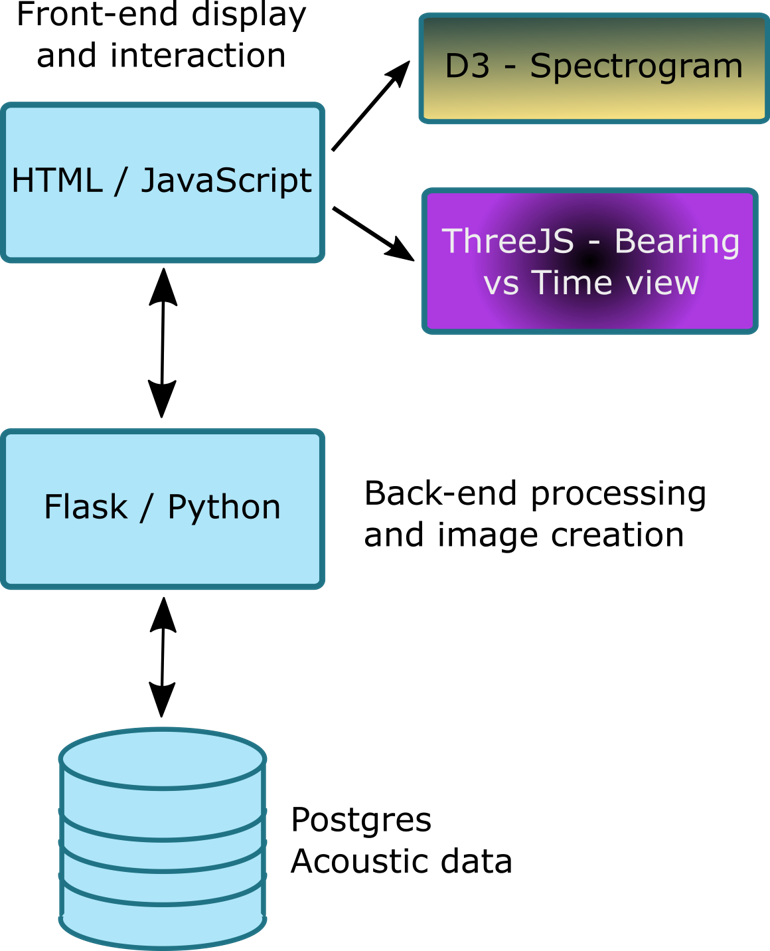

The web application presents acoustic data in a traditional spectrogram viewer built with D3. A secondary view, built with ThreeJS, provides a 3D Time vs Bearing plot. In this plot, the magnitudes associated with each bearing at each timestep are summed, indicating the directionality of sound sources. The plot lets the user select specific bearing angles and update the spectrogram to show only the sound associated with those bearings. All calculations are done in the back-end with Flask and Python. Users can adjust time and frequency spans, filter by bearing angle, and download snapshots of both views. Users can also drag back and forth along the spectrogram’s time axis to move the camera’s position in the 3D viewer.

Screenshots of the spectrogram and 3D views (left), and a spectrogram filtered for bearing angle (right).
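The Time vs Bearing summation and the bearing filter described above could be sketched as follows. This is a minimal illustration, not the project's actual back-end code: it assumes the data arrives as two arrays of shape (frequency, time), one holding magnitudes and one holding bearings in degrees, and it assumes NumPy is available. The function names `time_bearing_sum` and `filter_by_bearing` and the bin count are hypothetical.

```python
import numpy as np


def time_bearing_sum(magnitude, bearing_deg, n_bins=36):
    """Sum magnitudes per bearing bin at each timestep.

    magnitude, bearing_deg: arrays of shape (n_freq, n_time).
    Returns an array of shape (n_bins, n_time).
    """
    n_time = magnitude.shape[1]
    bin_width = 360.0 / n_bins
    # Map every bearing to a bin index in [0, n_bins).
    bin_idx = (np.mod(bearing_deg, 360.0) // bin_width).astype(int)
    bin_idx = np.minimum(bin_idx, n_bins - 1)  # guard against float edge cases
    # Column index of every cell, so each magnitude lands in its own timestep.
    time_idx = np.broadcast_to(np.arange(n_time), magnitude.shape)
    out = np.zeros((n_bins, n_time))
    # Unbuffered add, so repeated (bin, time) pairs accumulate correctly.
    np.add.at(out, (bin_idx, time_idx), magnitude)
    return out


def filter_by_bearing(magnitude, bearing_deg, lo_deg, hi_deg):
    """Keep magnitudes whose bearing lies in [lo_deg, hi_deg); zero the rest.

    A range such as 300 to 30 wraps through north.
    """
    b = np.mod(bearing_deg, 360.0)
    lo, hi = lo_deg % 360.0, hi_deg % 360.0
    if lo <= hi:
        keep = (b >= lo) & (b < hi)
    else:
        keep = (b >= lo) | (b < hi)
    return np.where(keep, magnitude, 0.0)
```

Under these assumptions, the output of `time_bearing_sum` would feed the 3D view, while `filter_by_bearing` would produce the magnitudes for the filtered spectrogram.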

The machine learning portion of this project will investigate whether directional acoustic data alone can be used to accurately estimate the position (range and bearing) of a sound source. Automatic Identification System (AIS) satellite data will be used to generate the training data set for a fixed mooring. Research published between 2017 and 2019 suggests that neural networks can localize sound sources using acoustic data alone [1][2][3].
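One way the training labels could be derived from AIS data is to convert each reported vessel position into a range and bearing relative to the mooring. The sketch below is an assumption about how that step might look, not a description of the project's pipeline: it uses the haversine formula on a spherical Earth, and the function name `range_and_bearing` and its parameters are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical approximation


def range_and_bearing(mooring_lat, mooring_lon, vessel_lat, vessel_lon):
    """Return (range in metres, bearing in degrees clockwise from true north).

    All inputs are latitudes and longitudes in decimal degrees.
    """
    phi1 = math.radians(mooring_lat)
    phi2 = math.radians(vessel_lat)
    dphi = phi2 - phi1
    dlam = math.radians(vessel_lon - mooring_lon)

    # Haversine great-circle distance.
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    rng = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(min(1.0, a)))

    # Initial bearing from the mooring towards the vessel.
    y = math.sin(dlam) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlam))
    bearing = math.degrees(math.atan2(y, x)) % 360.0
    return rng, bearing
```

Each AIS report whose timestamp falls within a recording window would then yield one (range, bearing) label for the acoustic data recorded at that time. For the short ranges typical of a single mooring, the spherical approximation is likely adequate, though an ellipsoidal model would be more precise.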

[1] H. Niu, E. Ozanich, P. Gerstoft, “Ship localization in Santa Barbara Channel using machine learning classifiers”, The Journal of the Acoustical Society of America 142, EL455, 2017, https://doi.org/10.1121/1.5010064

[2] Y. Wang, H. Peng, “Underwater acoustic source localization using generalized regression neural network”, The Journal of the Acoustical Society of America 143, 2018, https://doi.org/10.1121/1.5032311

[3] H. Niu, Z. Gong, E. Ozanich, P. Gerstoft, H. Wang, Z. Li, “Deep learning for ocean acoustic source localization using one sensor”, The Journal of the Acoustical Society of America, 2019. [Online]. Available: https://arxiv.org/abs/1903.12319 [Accessed: April 7, 2019]