An application that enables human analysts and artificial neural networks to work together on the task of analyzing large marine acoustic data sets…

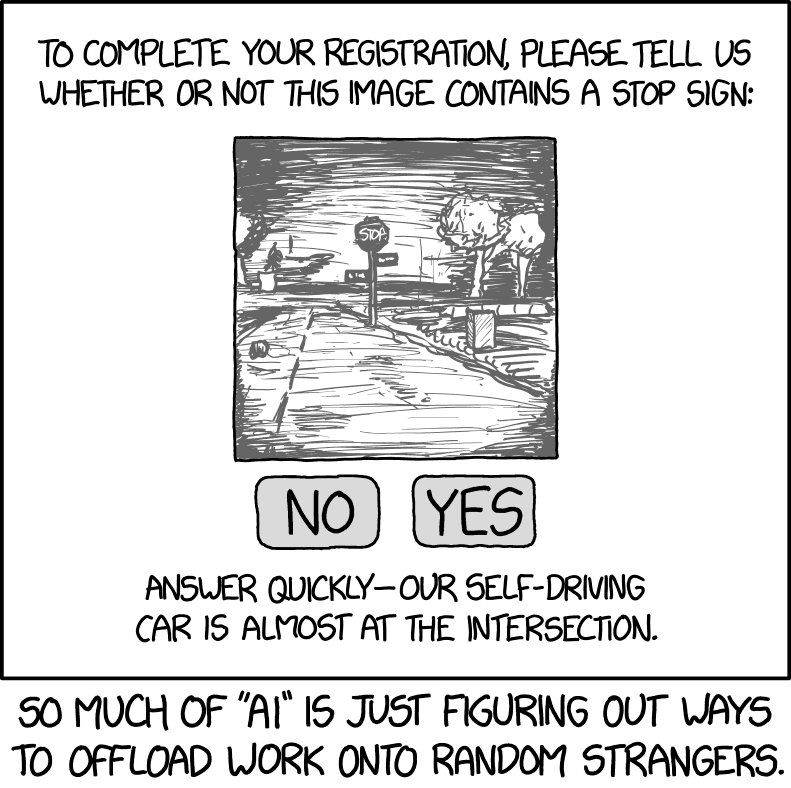

Self Driving by xkcd.com

Passive acoustic monitoring (PAM) is a highly effective and cost-efficient method, widely used in ecology to study animals in terrestrial and marine environments, that allows valuable data to be collected over long time periods with minimal effort. Such recorded “soundscapes” have important scientific and societal applications: the data provide scientists with a unique source of information on topics ranging from the behaviour of individual animals to changes in biodiversity, while the insights gained have the potential to inform decision making on environmental and conservation issues.

However, the full potential of PAM remains untapped because automated analytical tools are lacking. Marine soundscapes are complex and dynamic, so the analysis is usually performed either by an expert human analyst or by a carefully engineered, highly specialized algorithm that takes significant effort to develop in the first place. This is currently a bottleneck in the application of PAM and prevents the method from being used at large scale.

Deep Neural Networks have proven highly successful in image recognition, video segmentation and speech processing, and have shown great promise in marine acoustics. See, for example, Google’s recent effort to train a convolutional neural network to detect the songs of humpback whales. The successes of Deep Learning have, however, been contingent on the availability of very large annotated data sets, which are used to train the neural networks. In marine acoustics, annotated data sets are rarely large enough to train a Deep Neural Network from scratch. Yet all hope is not lost: perhaps, by allowing the network to work together with humans rather than seeking to replace them, we may still be able to leverage the power of Deep Neural Networks to learn from examples.

MERIDIAN is currently exploring this idea through the creation of a visual (inter)active learning application, which will allow the human analyst to inspect and correct the classifications proposed by the neural network. The corrected labels, in turn, will allow the neural network to improve its performance as its pool of training data gradually grows. As the network becomes more confident in its predictions, the analyst will be able to entrust more decisions to it, greatly speeding up the overall analysis. Such a positive feedback loop may allow neural networks to be trained more efficiently and save precious time for the analyst, who will be able to analyze the data and verify the performance of the detector in a single setting. As a critical step towards this goal, we are currently exploring various training schemes to identify the most efficient learning strategy for the neural network, taking advantage of data augmentation and transfer-learning techniques.
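To make the feedback loop concrete, here is a minimal sketch under our own assumptions. It is not MERIDIAN's implementation. A tiny logistic-regression model (the hypothetical `TinyClassifier`) stands in for the neural network. Clips are chosen for review by uncertainty sampling, a common active-learning strategy used here purely for illustration. The names `interactive_session`, `ask_analyst` and the 0.95 confidence threshold are likewise hypothetical.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class TinyClassifier:
    """Hypothetical stand-in for the neural network: logistic regression
    trained with plain stochastic gradient descent on feature vectors."""

    def __init__(self, n_features):
        self.w = [0.0] * n_features
        self.b = 0.0

    def predict_proba(self, x):
        return sigmoid(self.b + sum(wj * xj for wj, xj in zip(self.w, x)))

    def fit(self, X, y, epochs=200, lr=0.1):
        # Warm start: training continues from the current weights
        # on the (enlarged) pool of analyst-verified labels.
        for _ in range(epochs):
            for xi, yi in zip(X, y):
                err = self.predict_proba(xi) - yi
                self.w = [wj - lr * err * xj for wj, xj in zip(self.w, xi)]
                self.b -= lr * err


def interactive_session(pool, ask_analyst, model, batch_size=4,
                        n_rounds=3, confidence=0.95):
    """Human-in-the-loop labelling sketch (hypothetical API).

    Each round: predictions at or beyond `confidence` are accepted without
    review; the `batch_size` least certain clips are shown to the analyst,
    who confirms or corrects the proposed label; the model is then retrained
    on all analyst-verified labels.
    Returns (analyst_labels, auto_labels, still_unlabelled).
    """
    analyst_labels, auto_labels = {}, {}
    unlabelled = list(range(len(pool)))
    for _ in range(n_rounds):
        still_uncertain = []
        for i in unlabelled:
            p = model.predict_proba(pool[i])
            if p >= confidence or p <= 1.0 - confidence:
                auto_labels[i] = int(p >= 0.5)  # decision entrusted to the model
            else:
                still_uncertain.append(i)
        if not still_uncertain:
            unlabelled = []
            break
        # Uncertainty sampling: clips with p closest to 0.5 are reviewed first.
        still_uncertain.sort(key=lambda i: abs(model.predict_proba(pool[i]) - 0.5))
        queried = still_uncertain[:batch_size]
        unlabelled = still_uncertain[batch_size:]
        for i in queried:
            proposed = int(model.predict_proba(pool[i]) >= 0.5)
            analyst_labels[i] = ask_analyst(i, proposed)  # confirm or correct
        model.fit([pool[i] for i in analyst_labels],
                  [analyst_labels[i] for i in analyst_labels])
    return analyst_labels, auto_labels, unlabelled
```

One design choice is worth pointing out. Labels accepted on the model's confidence alone are not fed back as training data in this sketch, so early mistakes cannot reinforce themselves. Only analyst-verified labels enter the training pool. In a real application, `ask_analyst` would be the visual interface in which the analyst inspects a spectrogram. Here it can simply be a function that returns the known label of a toy example.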

An interactive learning application based on Deep Learning could one day become part of the standard toolkit available to marine ecologists to analyze large bodies of acoustic data. However, the success of Deep Learning in marine acoustics will ultimately depend on the availability of large training data sets, shared freely between research groups in the community. More on this in another blog post…