Many marine species have evolved to rely primarily on sound for underwater navigation, prey detection, and communication. Marine biologists therefore listen to the sounds produced by marine animals, for example, to detect the presence of an endangered species or to study social behavior. Increasing numbers of underwater listening and recording devices are being deployed worldwide, generating vast amounts of data that far exceed our capacity for manual analysis. Although algorithms exist that automatically search acoustic data for signals of interest, they often make many errors and generally do not match the accuracy of human analysts.

In recent years, a new class of algorithms known as deep neural networks has gained immense popularity, especially in fields such as computer vision and speech recognition, where they outperform existing algorithms and, on some tasks, even human analysts. Deep learning algorithms show an impressive ability to learn from large amounts of data and require far less feature engineering, which makes them well suited to the growing volume of ocean acoustics data. Such methods have recently been applied successfully to detection and classification problems in marine bioacoustics.

Motivated by these developments, MERIDIAN has developed a software package called Ketos that makes it easier for marine bioacousticians to train deep learning algorithms for sound detection and classification tasks. We are also working closely with researchers to develop detection and classification algorithms for selected marine species, including mammals and fish, and for various types of motorized vessels.

Fish

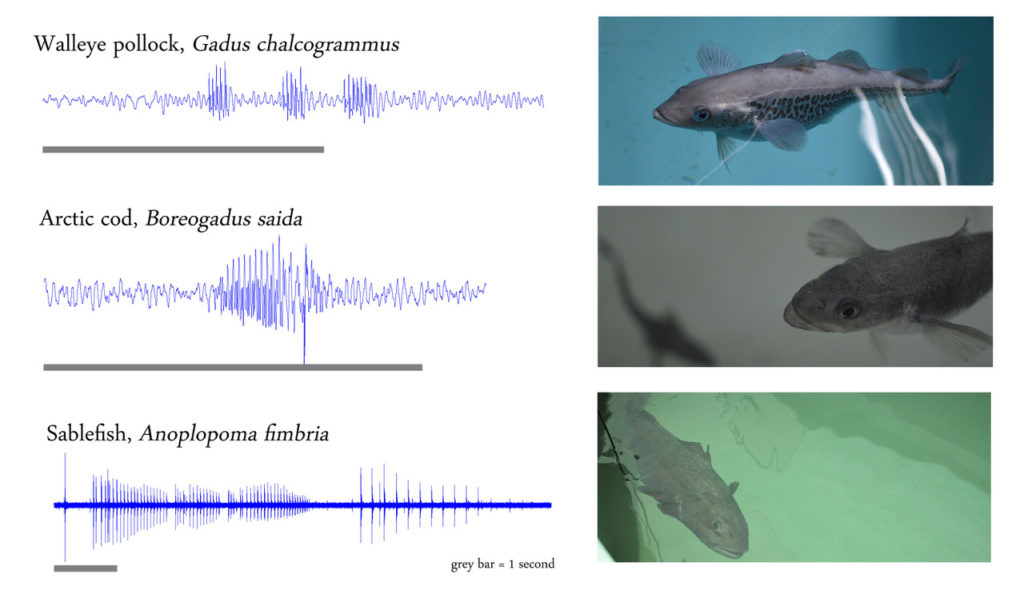

Hundreds of fish species are known to produce sounds. We are developing deep learning models to automatically detect fish sounds in hydrophone data. Such models will help researchers analyze large amounts of data that currently remain unexplored. The first species we are working with are Arctic cod and sablefish. These are two of the species studied by Francis Juanes and Amalis Riera, biologists on the MERIDIAN team at the University of Victoria, who closely guide our development efforts and collect the necessary data in both natural and controlled environments.

Marine Mammals

Using a combination of convolutional and recurrent neural networks, we are developing deep learning models to detect and classify several whale species, including right, sei, fin and humpback whales. We collaborate with several groups and institutions that provide us with data and expertise. For example, Dr. Chris Taggart and Dr. Kim Davies from the Department of Oceanography at Dalhousie University have been studying the endangered North Atlantic right whale using underwater acoustics and a variety of other methods. They provided data collected by autonomous underwater vehicles, which we are using to develop and evaluate detectors. Similarly, Dr. Yvan Simard, the MERIDIAN team leader in Rimouski, and our collaborators at the Bedford Institute of Oceanography and Ocean Networks Canada are providing data sets and guidance to ensure that our efforts are well aligned with the needs of the underwater acoustics community.
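To make “a combination of convolutional and recurrent neural networks” concrete, here is a minimal NumPy sketch of the data flow in such a model: a convolutional stage extracts local time-frequency patterns from a spectrogram, and a recurrent stage carries context across time frames to produce one score per frame. The function names, layer sizes, averaging over frequency, and plain (Elman) recurrent layer are illustrative assumptions; the weights are random and untrained, and this is not the architecture of MERIDIAN's actual models.

```python
import numpy as np

def conv2d_valid(x, k):
    """Single-channel 2-D 'valid' cross-correlation (what most DL libraries call convolution)."""
    kh, kw = k.shape
    out = np.empty((x.shape[0] - kh + 1, x.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * k)
    return out

def crnn_forward(spec, kernels, W_in, W_rec, w_out):
    """spec has shape (time, frequency); returns one score in (0, 1) per time frame."""
    # Convolutional stage: one feature map per kernel, ReLU, then average over frequency,
    # giving a (time, n_kernels) sequence of feature vectors.
    feats = np.stack(
        [np.maximum(conv2d_valid(spec, k), 0.0).mean(axis=1) for k in kernels], axis=1
    )
    # Recurrent stage: a plain (Elman) RNN carries information from earlier frames forward.
    h = np.zeros(W_rec.shape[0])
    scores = []
    for x_t in feats:
        h = np.tanh(W_in @ x_t + W_rec @ h)
        scores.append(1.0 / (1.0 + np.exp(-(w_out @ h))))  # sigmoid of a linear readout
    return np.array(scores)

# Hypothetical, untrained weights: this only demonstrates shapes and data flow.
rng = np.random.default_rng(1)
spec = rng.normal(size=(20, 16))                  # stand-in for a 20-frame, 16-bin spectrogram
kernels = [rng.normal(size=(3, 3)) for _ in range(4)]
W_in = rng.normal(scale=0.5, size=(8, 4))         # 4 conv features -> 8 hidden units
W_rec = rng.normal(scale=0.3, size=(8, 8))
w_out = rng.normal(size=8)

scores = crnn_forward(spec, kernels, W_in, W_rec, w_out)
print(scores.shape)                               # prints (18,)
```

In practice such a model would be built and trained with a deep learning framework; the hand-written loops here only make each stage explicit. The recurrent stage is what lets a per-frame decision depend on what was heard in earlier frames, which helps with calls that unfold over time.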

Our first deep learning model, released in 2020, has been trained to detect the characteristic “upcall” of the endangered North Atlantic right whale. You can learn more about the model and its performance in the paper “Performance of a deep neural network at detecting North Atlantic right whale upcalls” published in the Journal of the Acoustical Society of America.

Motorized Marine Vessels

In the United States, a research team led by Neil Hammerschlag, Ph.D., of the University of Miami's Rosenstiel School of Marine and Atmospheric Science is studying the effects of coastal urbanization on the distribution, movements, and health of sharks. The study, known as the Urban Sharks project, focuses on northern Biscayne Bay, a region heavily affected by the population of Miami (2.5 million inhabitants), notably because of heavy recreational boat traffic.

Together with the Ocean Tracking Network (OTN), Neil and his team have deployed a tracking array that is being used to study the distribution and movements of individual tagged sharks. They have also deployed a number of broadband hydrophones to listen to underwater sounds, both man-made and produced by marine life.

MERIDIAN is supporting Neil and his team in analyzing the broadband hydrophone data and has developed software to detect underwater noise caused by boats. This “boat detector” has been successfully applied to quantify recreational boating activity in Biscayne Bay, yielding valuable data on the disturbance caused by boats. These disturbances can then be compared with the sharks' habitat and activity levels.

We are now extending our boat detector so that it can distinguish between different types of boating activity and different engine types. We will also take a closer look at the sounds made by marine animals in an attempt to quantify the biodiversity of the bay.

Hydrophone on the sandy bottom of Biscayne Bay (left); deployment of a hydrophone (right)

Kedgi – An interactive training app

One of the benefits of using deep learning to develop detectors and classifiers is that the high plasticity of neural networks lets us tailor a model to new conditions as needed. MERIDIAN is developing an application called Kedgi that will allow users to interact with a neural network while it trains: the user provides feedback on the model's performance, and the model uses this expert input to achieve better results.

Starting with a pre-trained model, the user can apply it to a sample of a new dataset and evaluate its performance. If the results are not satisfactory, the user can feed the model a larger amount of data and tell it whether its outputs are correct. During this process, the application collects the user's input to improve the model's detection and classification abilities. This is especially useful when a pre-trained model performs very well in one scenario (e.g., detecting humpback whales in an area with shipping noise) but performs worse in a new environment (e.g., an area with high levels of seismic noise). With the user's help, the model can adapt to the new environment more quickly.

An important feature of Kedgi is that it also serves as an annotation platform, and annotation is a routine task in a bioacoustician's workflow. In this way, a neural network can also learn by observing how the analyst works. The current version of Kedgi provides only a basic set of functionalities, allowing users to run models on audio files and inspect the detections, but we are working hard to implement more interactive functionalities!
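The feedback loop described above (apply a pre-trained model to new data, let the analyst mark each output right or wrong, and keep training on the corrected labels) can be sketched with a toy logistic-regression “model” in NumPy. This is a hypothetical illustration, not Kedgi's implementation: the synthetic two-feature data, the `offset` standing in for a noisier environment, the hand-set “pre-trained” weights, and all function names are assumptions, and a real detector would be a deep neural network.

```python
import numpy as np

def predict_proba(w, b, X):
    """Logistic model: probability that each row of X contains the target sound."""
    return 1.0 / (1.0 + np.exp(-(X @ w + b)))

def accuracy(w, b, X, y):
    return float(np.mean((predict_proba(w, b, X) >= 0.5) == y))

def labels_from_feedback(pred, is_correct):
    """The analyst only marks each output right or wrong; flip the ones marked wrong."""
    return np.where(is_correct, pred, 1 - pred)

def fine_tune(w, b, X, y, lr=0.5, steps=1000):
    """Continue gradient descent on the log-loss, starting from the pre-trained weights."""
    w, b = w.copy(), float(b)
    for _ in range(steps):
        err = predict_proba(w, b, X) - y  # derivative of the log-loss w.r.t. the logit
        w -= lr * X.T @ err / len(y)
        b -= lr * err.mean()
    return w, b

def make_data(rng, n, offset):
    """Hypothetical 2-feature data; `offset` mimics a noisier acoustic environment."""
    y = rng.integers(0, 2, n)
    X = rng.normal(0.0, 1.0, (n, 2))
    X[:, 0] += np.where(y == 1, 2.0, -2.0) + offset
    return X, y

rng = np.random.default_rng(0)
w0, b0 = np.array([1.0, 0.0]), 0.0                 # hand-set weights that work on quiet data (offset 0)

X_test, y_test = make_data(rng, 1000, offset=3.0)  # held-out clips from the new environment
X_fb, y_fb = make_data(rng, 200, offset=3.0)       # sample the analyst reviews

pred = (predict_proba(w0, b0, X_fb) >= 0.5).astype(int)
is_correct = pred == y_fb                          # simulated analyst feedback
w1, b1 = fine_tune(w0, b0, X_fb, labels_from_feedback(pred, is_correct))

acc_before = accuracy(w0, b0, X_test, y_test)
acc_after = accuracy(w1, b1, X_test, y_test)
print(f"before: {acc_before:.2f}  after: {acc_after:.2f}")
```

On this toy data the pre-trained weights flag most noise-only clips from the shifted environment as detections, and fine-tuning on a couple of hundred analyst-reviewed examples should recover most of the lost accuracy. The same idea, continuing training from pre-trained weights on expert-corrected labels, carries over to deep networks.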

Ketos – A deep learning software library

Most of our tools are aimed at users who do not have advanced machine learning or software development skills: the pre-trained models and apps we are working on do not require any programming or advanced computer skills. But we also want developers working in underwater acoustics to be able to take advantage of our work. That is why all the code we use to develop our models is available in an open-source library, Ketos. It contains not only the neural network architectures we find most useful for building detectors and classifiers, but also data augmentation algorithms, utilities for handling large datasets, several signal processing algorithms, and more. Those who want to train a new model from scratch, try a variation of a network architecture, or simply dig into the code base to see how things are done can find out more here. There, developers will also find extensive documentation with tutorials, a test suite, and instructions on how to contribute.
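Ketos's own classes and functions are not reproduced here. Instead, the hypothetical NumPy functions below (`spectrogram`, `time_shift`, `batches`) are a minimal sketch of three kinds of building blocks listed above: a signal-processing step (a short-time Fourier transform), a data-augmentation step (a random circular time shift), and mini-batch loading that only needs integer indexing, so the data could live in a memory-mapped file rather than in RAM. The synthetic clips, sampling rate, and window sizes are assumptions chosen for illustration.

```python
import numpy as np

def spectrogram(signal, win_len=256, hop=128):
    """Log-magnitude spectrogram (dB) from a Hann-windowed short-time Fourier transform."""
    window = np.hanning(win_len)
    n_frames = 1 + (len(signal) - win_len) // hop
    frames = np.stack([signal[i * hop:i * hop + win_len] * window for i in range(n_frames)])
    return 20 * np.log10(np.abs(np.fft.rfft(frames, axis=1)) + 1e-10)  # (time, frequency)

def time_shift(spec, rng, max_shift=3):
    """Augmentation: circularly shift the spectrogram by up to max_shift frames."""
    return np.roll(spec, int(rng.integers(-max_shift, max_shift + 1)), axis=0)

def batches(specs, labels, batch_size, rng):
    """Yield shuffled, augmented mini-batches. `specs` only needs integer indexing,
    so it can be a list, an array, or a numpy.memmap backed by a file on disk."""
    order = rng.permutation(len(labels))
    for start in range(0, len(order), batch_size):
        sel = order[start:start + batch_size]
        yield np.stack([time_shift(specs[i], rng) for i in sel]), labels[sel]

# Hypothetical data: ten 1-s clips at 2 kHz, half with a rising tone, half noise only.
fs = 2000
t = np.arange(fs) / fs
rng = np.random.default_rng(42)
labels = np.array([1, 0] * 5)
clips = [rng.normal(0, 0.1, fs) + lab * np.sin(2 * np.pi * (100 + 200 * t) * t) for lab in labels]
specs = np.stack([spectrogram(c) for c in clips])   # shape (10, 14, 129)

for X, y in batches(specs, labels, batch_size=4, rng=rng):
    print(X.shape, y)
```

Applying the augmentation inside the batch generator means each pass over the data sees slightly different versions of the same clips, without ever storing the augmented copies.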